In the world of software, performance plays a key role. It affects user experience, customer satisfaction, and even overall business profitability. This is especially true for PHP applications, which are renowned for their simplicity and flexibility, but can face performance issues if not optimized.

In this article, we'll dive into the world of PHP application optimization. We will look at how you can improve the performance of your code without jeopardizing its readability or functionality. We'll discuss a variety of strategies and approaches, ranging from elementary best practices such as moving computation out of loops and caching to topics such as micro-optimization and optimizing the order of operations.

Before we begin, however, it's worth reminding ourselves that optimization is not always a panacea. It's important to remain realistic and realize that not all performance improvements can have a noticeable impact on the overall efficiency of your application. Consequently, it is best to focus on those code sections that can really bring noticeable results.

When thinking about robust software, the first thing that comes to mind is the use of efficient code. Through optimizations, we create algorithms that perform the same task at a significantly lower cost. This thought is, in a sense, the foundation of quality programming.

We can no longer imagine everyday life without software, and we don't want to change that. However, we should try to use as few resources as possible to get the same result.

Algorithms are a cornerstone of every software developer's basic training. But, hand on heart, how often do you still write a sort function yourself? Exactly: almost never. Much more often we rely on the functions that PHP already offers. This is reasonable, because these functions are tried and tested, and we usually can't write faster ones.

But even if we don't usually write such specialized algorithms ourselves, there are still plenty of other mistakes that can significantly affect performance. Especially in loops with many iterations, even small performance losses can have a big impact on the system.

Unfortunately, time and time again it turns out that even basic best practices are not being applied:

- Moving computation out of loops: are there operations that don't need to be performed on every loop pass? If so, perform the operation once before the loop and store the result in a variable.

- Caching: it doesn't always have to be Redis. Even simple in-memory caching in an array can be very useful for avoiding repeated database queries and similar work.

- Reasonable micro-optimization: Performance improvements such as using single quotes instead of double quotes have only a minor impact on performance, but in performance-critical parts of code they can make a small difference. A good overview can be found in The PHP Benchmark, for example.

- Sequence optimization: even small changes to the program flow can increase speed, for example by ordering the conditions in if statements by their cost. Conditions that are cheap to evaluate should be checked first.
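The first, second, and last items of this list can be sketched in a few lines. This is only an illustration under stated assumptions: `loadTaxRate()` is a hypothetical stand-in for an expensive lookup such as a database query, and the order data is made up.

```php
<?php

declare(strict_types=1);

/**
 * Hypothetical stand-in for an expensive lookup, e.g. a database query.
 * The $lookups counter only exists to make the saved calls visible.
 */
function loadTaxRate(string $country, int &$lookups): float
{
    $lookups++;

    return ['DE' => 0.19, 'FR' => 0.20][$country] ?? 0.0;
}

/**
 * Simple in-memory cache: each country is looked up at most once per run.
 */
function cachedTaxRate(string $country, int &$lookups): float
{
    static $cache = [];

    return $cache[$country] ??= loadTaxRate($country, $lookups);
}

$orders = [
    ['country' => 'DE', 'net' => 100.0],
    ['country' => 'FR', 'net' => 50.0],
    ['country' => 'DE', 'net' => 10.0],
    ['country' => 'IT', 'net' => 0.0],
];

$discountPercent = 10;

// Hoisted: the factor is the same for every order, so it is computed once.
$discountFactor = 1 - $discountPercent / 100;

$lookups = 0;
$total = 0.0;

foreach ($orders as $order) {
    // Cheap check first: empty orders never reach the costlier lookup.
    if ($order['net'] <= 0.0) {
        continue;
    }

    $rate = cachedTaxRate($order['country'], $lookups);
    $total += $order['net'] * $discountFactor * (1 + $rate);
}

printf("total: %.2f, lookups: %d\n", $total, $lookups);
```

Four orders are processed, but the lookup only runs twice: once for DE and once for FR. The second DE order is served from the array cache, and the empty IT order is skipped before any lookup happens.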

For all the love of optimization: stay realistic! If you spend a few hours making minimal improvements to a script that rarely runs, the effort is unlikely to ever pay off.

In keeping with Donald Knuth's famous saying "Premature optimization is the root of all evil", it's better to take care of those code sections that have a noticeable impact on performance first.

Databases

Relational databases are also a common source of performance problems. You can tackle these with the following basic rules of thumb:

- Optimize tables: indexes in particular are often forgotten, because their absence only becomes noticeable as degraded performance once the data volume is large, as it is in big systems.

- Be frugal with data: don't query unnecessary data, i.e. avoid SELECT * if only a few columns are required, and use sensible LIMIT clauses.

- Use alternatives: Relational databases are not the best choice for storing large amounts of unstructured data (e.g., logs) because write access to them is expensive and should be avoided. Use NoSQL alternatives for this purpose, which do a much better job.

Thanks to object-oriented programming (OOP), database abstraction layers (DBAL), and object-relational mapping (ORM), many queries are hidden beneath thick layers of code that are difficult to see through, especially when they are generated by tools such as Eloquent or Doctrine. You should be very careful here, because otherwise you end up with the same queries being executed several times per request.

Therefore, make it a habit to log all database queries on your local development system and review these logs regularly. Tools such as the PHP Debug Bar can be easily integrated into existing applications and show how many queries are sent per page load and how fast they execute. You can also use a logging tool to identify particularly slow queries. Familiarize yourself with the EXPLAIN statement to find missing indexes.
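To get a feeling for what EXPLAIN reveals, here is a minimal sketch. It assumes the pdo_sqlite extension is installed and uses an in-memory SQLite database so that it runs without any setup; SQLite's variant of the statement is `EXPLAIN QUERY PLAN`, whereas MySQL and PostgreSQL use plain `EXPLAIN` and report the plan in a different format. The table, the index name, and the `queryPlan()` helper are made up for illustration.

```php
<?php

declare(strict_types=1);

// Assumption: pdo_sqlite is available. The schema is purely illustrative.
$pdo = new PDO('sqlite::memory:');
$pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
$pdo->exec('CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, last_name TEXT)');

/**
 * Returns the human-readable plan lines that SQLite reports for a query.
 */
function queryPlan(PDO $pdo, string $sql): array
{
    $rows = $pdo->query('EXPLAIN QUERY PLAN ' . $sql)->fetchAll(PDO::FETCH_ASSOC);

    return array_column($rows, 'detail');
}

$sql = "SELECT id, last_name FROM users WHERE email = 'a@example.com'";

// Without an index on email, SQLite has to scan the whole table.
$before = queryPlan($pdo, $sql);

$pdo->exec('CREATE INDEX idx_users_email ON users (email)');

// With the index in place, the plan names the index it uses.
$after = queryPlan($pdo, $sql);

print_r($before);
print_r($after);
```

The exact wording of the plan lines differs between SQLite versions, but the first plan reports a scan of the table while the second one mentions `idx_users_email`.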

Convenience plays a big role here, of course. It's convenient to get an array or collection of fully initialized objects in one call. After all, we are using OOP, and all other ways are stone age, aren't they? But people overlook the fact that even the best ORM has overhead, and query builders sometimes generate poorly performing queries.

It gets particularly bad when the result of a query contains many records, because a separate object is instantiated for each row. If we have to iterate over thousands of objects, we should not be surprised that processing the result takes longer.

For large amounts of data, using SQL queries directly, such as through PDO, is often a smarter choice. There is nothing wrong with using well-crafted SQL queries and simple arrays, as long as we carefully encapsulate this in a repository, for example. It's not just the system that benefits from improved performance, but the users as well.
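A minimal sketch of this idea, assuming the pdo_sqlite extension and an in-memory database so the example is self-contained. The `UserRepository` class, the table, and the column names are hypothetical and only illustrate the pattern: plain SQL behind a small, well-defined interface, with only the needed columns and an explicit LIMIT.

```php
<?php

declare(strict_types=1);

/**
 * Hypothetical repository: it hides the SQL from the rest of the
 * application and returns plain arrays instead of hydrated objects.
 */
final class UserRepository
{
    public function __construct(private PDO $pdo)
    {
    }

    /**
     * @return array<int, array<string, mixed>>
     */
    public function findNamesByCountry(string $country, int $limit): array
    {
        // Only the columns that are needed, and an explicit LIMIT.
        $statement = $this->pdo->prepare(
            'SELECT id, last_name FROM users WHERE country = :country ORDER BY id LIMIT :limit'
        );
        $statement->bindValue(':country', $country);
        $statement->bindValue(':limit', $limit, PDO::PARAM_INT);
        $statement->execute();

        return $statement->fetchAll(PDO::FETCH_ASSOC);
    }
}

// Demo data, purely illustrative.
$pdo = new PDO('sqlite::memory:');
$pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
$pdo->exec('CREATE TABLE users (id INTEGER PRIMARY KEY, last_name TEXT, country TEXT, bio TEXT)');
$pdo->exec(
    "INSERT INTO users (last_name, country, bio) VALUES
        ('Meier', 'DE', 'long text'),
        ('Dupont', 'FR', 'long text'),
        ('Schmidt', 'DE', 'long text')"
);

$rows = (new UserRepository($pdo))->findNamesByCountry('DE', 10);

print_r($rows);
```

Callers only see `findNamesByCountry()`, so the query can later be tuned or replaced without touching the rest of the code.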

Identifying bottlenecks

Optimization only makes sense if we don't optimize blindly, but take measurements and compare the results. We don't even need special tools for this. For example, if we want to know which is better for searching for a substring, str_contains or preg_match, we can quickly find out on our own:

<?php

declare(strict_types=1);

namespace App\Console\Commands;

use Illuminate\Console\Command;

final class CompareStringsCommand extends Command
{
    protected $signature = 'compare:strings';

    protected $description = 'Comparing performance of different commands';

    public function handle(): int
    {
        $haystack = 'Is there any word Sergey here?';

        // Measure 200,000 calls of str_contains().
        $start = microtime(true);
        for ($i = 0; $i < 200000; $i++) {
            str_contains($haystack, 'Sergey');
        }
        $end = microtime(true);
        $this->info(sprintf("result of str_contains(): %.4f\n", $end - $start));

        // Measure the same search with preg_match(): same needle and the same
        // case-sensitive match, so that the two loops are comparable.
        $start = microtime(true);
        for ($i = 0; $i < 200000; $i++) {
            preg_match('/Sergey/', $haystack);
        }
        $end = microtime(true);
        $this->info(sprintf("result of preg_match(): %.4f\n", $end - $start));

        // Exit code 0 signals success; a non-zero code would signal a failure.
        return self::SUCCESS;
    }
}

The above command in Laravel is run via php artisan compare:strings and gives us the following result:

result of str_contains(): 0.0042

result of preg_match(): 0.0089

Absolute numbers don't matter here, but as a ratio it becomes obvious that str_contains is clearly faster in this particular case. This is not surprising, since preg_match is too complex for this simple task. But it also shows that the choice of means should be well thought out. A single call may not matter, but in large numbers it does.
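Outside of Laravel, the same kind of quick measurement can be written as a small standalone script. The following is a sketch, not a replacement for a real benchmarking tool: the helper name `averageMicroseconds()` is made up, and it uses PHP's built-in `hrtime()`, which returns a monotonic timestamp in nanoseconds.

```php
<?php

declare(strict_types=1);

/**
 * Runs $callback $revolutions times and returns the average duration of a
 * single call in microseconds.
 */
function averageMicroseconds(callable $callback, int $revolutions = 100_000): float
{
    $callback(); // warm-up call, not measured

    $start = hrtime(true);
    for ($i = 0; $i < $revolutions; $i++) {
        $callback();
    }
    $elapsedNanoseconds = hrtime(true) - $start;

    return $elapsedNanoseconds / $revolutions / 1_000;
}

$haystack = 'Is the word Sergey in this sentence?';

$results = [
    'str_contains' => averageMicroseconds(fn (): bool => str_contains($haystack, 'Sergey')),
    'preg_match' => averageMicroseconds(fn (): bool => preg_match('/Sergey/', $haystack) === 1),
];

foreach ($results as $name => $microseconds) {
    printf("%-12s %.4f µs per call\n", $name, $microseconds);
}
```

The numbers include the overhead of calling the closure, so they are only meaningful relative to each other, which is all this kind of comparison needs.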

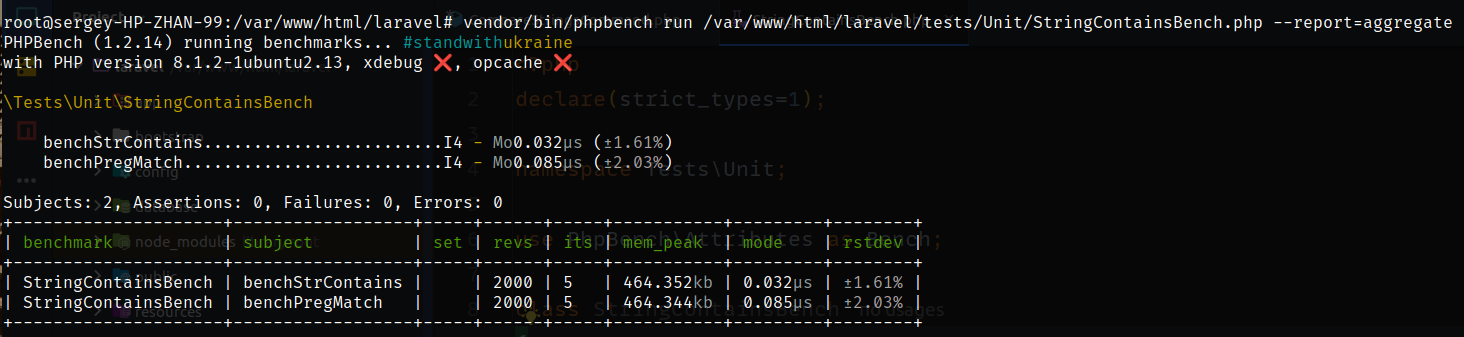

By the way, as the image above shows, comparing performance with the PHPBench tool gives us clearer and more accurate metrics. The test class has a very simple structure:

<?php

declare(strict_types=1);

namespace Tests\Unit;

use PhpBench\Attributes as Bench;

class StringContainsBench
{
    #[Bench\Revs(2000)]
    #[Bench\Iterations(5)]
    public function benchStrContains(): void
    {
        str_contains('Is there Sergey string here?', 'Sergey');
    }

    #[Bench\Revs(2000)]
    #[Bench\Iterations(5)]
    public function benchPregMatch(): void
    {
        preg_match("/Sergey/i", 'Is there Sergey string here?');
    }
}

The #[Bench\Revs] and #[Bench\Iterations] attributes define how often measurements should be repeated to avoid interference in the evaluation. Please refer to the project documentation for more details. Measuring the performance of such small code fragments makes sense only if we have a rough idea of what fragments may be problematic. Debuggers and profilers are useful tools for finding them.

Perhaps the best-known debugger in the PHP world is Xdebug. With its help we can execute a program line by line. The tool is primarily meant for debugging, but by stepping through our code we already get a first idea of which sections run particularly slowly.

Even more precise analysis can be performed with the help of a profiler. It records each function call during program execution with its duration and thus can create a detailed picture of internal work. Maybe a slow function is called especially often or there is a loop somewhere that you didn't even think about?

By the way, Xdebug comes with a profiler. If it is activated, a cachegrind file is created for each run. However, you need another tool to read it. If your IDE does not support this format, there are tools like KCacheGrind or QCacheGrind.
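As a starting point, activating the profiler could look roughly like this. The setting names are those of Xdebug 3; the output directory is an assumption and has to be adapted to your system.

```ini
; php.ini or a separate conf.d/xdebug.ini (Xdebug 3 setting names)
xdebug.mode = profile

; Profile only requests that carry XDEBUG_TRIGGER as a GET/POST parameter,
; cookie, or environment variable, instead of every single request.
xdebug.start_with_request = trigger

; Assumed directory: it must exist and be writable by the PHP process.
xdebug.output_dir = /tmp/xdebug

; File name pattern of the generated cachegrind files (%p = process ID).
xdebug.profiler_output_name = cachegrind.out.%p
```

With the trigger setting, normal requests stay fast, and a profile is only written when you explicitly ask for one.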

Under full load

In local development, only small datasets are usually used for testing. Thus, many bottlenecks remain hidden until customers find them, for example when they want to import hundreds of thousands of rows of CSV data instead of a few dozen. Commercial tools like New Relic, Blackfire, or Tideways measure the performance of complex systems down to the fine details. They can provide valuable clues about exactly where problems are occurring.

However, these tools come at a cost, so they are often neglected. When customers run into real performance problems, they are purchased in a hurry. But they would be much more useful if they were built into the system from the start.

Thick libraries

Packages should be chosen with care because they tend to suffer from featuritis: having absorbed countless features over the years, they are often overloaded and thus become too heavy.

A case in point is the widely used Nesbot/Carbon. The name itself is, of course, very fitting for the subject matter, but it also illustrates how best not to do things. Carbon claims to provide a simple API for PHP's own date functions. Perhaps this is also a matter of personal taste. We don't want to argue about taste, but we are talking about performance - and here Carbon performs significantly worse, as the code below shows, again using PHPBench.

<?php

declare(strict_types=1);

namespace Tests\Unit;

use Illuminate\Support\Carbon;
use PhpBench\Attributes as Bench;

class CarbonDateBench
{
    #[Bench\Revs(2000)]
    #[Bench\Iterations(5)]
    public function benchStrtotime(): void
    {
        strtotime('-30 days');
    }

    #[Bench\Revs(2000)]
    #[Bench\Iterations(5)]
    public function benchCarbon(): void
    {
        Carbon::now()->subDays(30)->getTimestamp();
    }

    #[Bench\Revs(1000)]
    #[Bench\Iterations(5)]
    public function benchDateTime(): void
    {
        (new \DateTime('-30 days'))->getTimestamp();
    }
}

Here, we use three different methods to determine the timestamp of the date 30 days ago: the strtotime function, the DateTime object (both PHP-native), and Carbon. As the results below show, Carbon needs about 11.3 microseconds per call, roughly 3.5 times as long as strtotime (3.2 microseconds) and 2.6 times as long as DateTime (4.3 microseconds).

root@sergey-HP-ZHAN-99:/var/www/html/laravel# vendor/bin/phpbench run /var/www/html/laravel/tests/Unit/CarbonDateBench.php --report=aggregate
PHPBench (1.2.14) running benchmarks... #standwithukraine
with configuration file: /var/www/html/laravel/phpbench.json
with PHP version 8.1.2-1ubuntu2.13, xdebug ❌, opcache ❌

\Tests\Unit\CarbonDateBench

    benchStrtotime..........................I4 - Mo3.240μs (±1.28%)
    benchCarbon.............................I4 - Mo11.327μs (±1.32%)
    benchDateTime...........................I4 - Mo4.297μs (±2.04%)

Subjects: 3, Assertions: 0, Failures: 0, Errors: 0

+-----------------+----------------+-----+------+-----+----------+----------+--------+
| benchmark       | subject        | set | revs | its | mem_peak | mode     | rstdev |
+-----------------+----------------+-----+------+-----+----------+----------+--------+
| CarbonDateBench | benchStrtotime |     | 2000 | 5   | 4.948mb  | 3.240μs  | ±1.28% |
| CarbonDateBench | benchCarbon    |     | 2000 | 5   | 5.409mb  | 11.327μs | ±1.32% |
| CarbonDateBench | benchDateTime  |     | 1000 | 5   | 4.948mb  | 4.297μs  | ±2.04% |
+-----------------+----------------+-----+------+-----+----------+----------+--------+

Again, single calls don't cause problems, but in mass execution it certainly matters. And why even use a significantly slower function when the alternatives are just as good?

But things get even worse. Carbon takes up almost 5 MB of disk space. This is largely due to the 180 language files in the Lang directory, which together take up 3.5 MB. As a rule, an application does not support every language in the world, so even by a generous estimate our project may need only 500 kilobytes of language files. The remaining three megabytes of unused language files are part of each installation anyway.

Three megabytes doesn't seem like much. However, when you consider that Carbon is currently installed about 200 thousand times a day, this causes over 200 terabytes of unnecessary network traffic per year. Therefore, in this case it would make sense to split off the language files so that they are only downloaded when needed. Incidentally, the well-known fzaninotto/Faker package was abandoned by its original developer for similar reasons.

There may always be situations where the use of Carbon is necessary for a particular task. However, whenever possible, you should try to do without it. PHP's own date functions are often quite adequate and much faster, though perhaps not as convenient to use.
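To give an impression of how far PHP's native date classes go, here is a small sketch using `DateTimeImmutable`. The fixed reference date is an assumption that keeps the output reproducible; in real code it would usually be the current time.

```php
<?php

declare(strict_types=1);

$utc = new DateTimeZone('UTC');

// Fixed reference date so the example is reproducible.
$today = new DateTimeImmutable('2024-03-31 12:00:00', $utc);

// Date arithmetic: immutable objects return a new instance and leave
// $today untouched.
$thirtyDaysAgo = $today->modify('-30 days');

// Formatting and Unix timestamps.
echo $thirtyDaysAgo->format('Y-m-d'), "\n"; // 2024-03-01
echo $thirtyDaysAgo->getTimestamp(), "\n";

// Difference between two dates in whole days.
$days = $thirtyDaysAgo->diff($today)->days;
echo $days, "\n"; // 30

// Date objects can be compared with the normal comparison operators.
var_dump($thirtyDaysAgo < $today); // bool(true)
```

Arithmetic, formatting, differences, and comparisons cover a large share of everyday date handling without any additional package.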

Perhaps there is a more compact alternative to a package? Guzzle's PSR-7 implementation, for example, is also very commonly used. By comparison, nyholm/psr7 has much less code and is faster, with a similar feature set.

So what is the first thing to do?

It's impossible to list all the ways to improve application performance in this article, but I'd still like to give you some food for thought:

- Lean APIs: send only the data that is really needed in a response. GraphQL APIs naturally have an advantage here by virtue of their principle, but you can also save data in RESTful APIs by providing additional endpoints for special cases where more data is needed. You can also let clients decide for themselves which data should be included in the response by using special query parameters. For example, a call to GET /user?fields=id,last_name,first_name would instruct the user endpoint to return only the id, last_name, and first_name fields. This of course has to be implemented on the server side.

- Compression: gzip compression for websites or APIs saves bandwidth and thus improves performance. Sounds trivial, but it is often forgotten.

- Build process: don't forget to use caching in CI/CD pipelines, especially for Composer. This saves a lot of valuable compute time.

- Docker: containers often carry around unnecessary data. Containers based on lean Alpine Linux are several times smaller, and thus build faster.

- Composer: remove unnecessary Composer packages, which are otherwise installed again on every build and on every composer install or update. Tools such as composer-unused can help with this.
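The server-side part of the `fields` query parameter from the Lean APIs item could be sketched as follows. The helper `selectFields()`, its signature, and the sample record are hypothetical; the allow-list is an assumption added here so that clients cannot request internal fields.

```php
<?php

declare(strict_types=1);

/**
 * Reduces a record to the fields requested via ?fields=..., ignoring
 * everything that is not on the allow-list. Without the parameter, all
 * allowed fields are returned.
 *
 * Hypothetical helper: name and signature are illustrative.
 */
function selectFields(array $record, ?string $fieldsParameter, array $allowedFields): array
{
    $allowed = array_intersect_key($record, array_flip($allowedFields));

    if ($fieldsParameter === null || trim($fieldsParameter) === '') {
        return $allowed;
    }

    $requested = array_map('trim', explode(',', $fieldsParameter));

    return array_intersect_key($allowed, array_flip($requested));
}

$user = [
    'id' => 42,
    'first_name' => 'Ada',
    'last_name' => 'Lovelace',
    'email' => 'ada@example.com',
    'password_hash' => '...',
];
$publicFields = ['id', 'first_name', 'last_name', 'email'];

// In a real controller the value would come from the request,
// e.g. $_GET['fields'] or the framework's request object.
$response = selectFields($user, 'id,last_name,first_name,password_hash', $publicFields);

echo json_encode($response), "\n";
```

Filtering the finished record keeps the sketch short. For large tables it would be even better to pass the validated field list on to the query, so that unneeded columns are never read from the database in the first place.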

Why is all of this necessary?

Unfortunately, performance is often treated as an afterthought. However, the impact of performance on costs, customer satisfaction, and business profits should not be underestimated. Early performance monitoring can prevent the worst consequences. But once the code is in production, refactoring is no longer so easy. The worst part is probably explaining to a product manager why it takes another sprint to make already completed, tested, and approved functionality usable under load.

In my career, I've encountered countless examples where even small improvements quickly resulted in more than ten times faster code.

Especially in the cloud, these performance issues can become costly as well. With autoscaling, i.e. automatically adapting the provided resources to the current load, these bottlenecks are quickly compensated for by increased processing power and at first go unnoticed, at least until someone takes a closer look at the bill. Thus, software with good performance pays off financially as well.

In conclusion

Performance optimization is a complex process that requires a deep understanding of both the specific application and the general principles of programming and systems. However, the results of this process can be quite impressive. Less resource usage, faster task execution, improved user experience - all this lies within the reach of those who are ready to spend time and effort on optimizing their code.